The Split No One Expected

Our research asked 10 bicycle questions every day from October 6 to October 20, 2025. That's 100 data requests per query per day.
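To make "search probability" concrete, here is a minimal sketch of how a daily figure could be computed. It assumes each query is sent 100 times per day and that each run records whether a web search was triggered. The `DailyResult` type, its field names, and the sample counts are illustrative, not taken from the study's tooling.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class DailyResult:
    """One query's tally for one day (hypothetical structure)."""
    query: str
    day: str        # ISO date, e.g. "2025-10-06"
    runs: int       # how many times the query was sent that day
    searched: int   # how many of those runs triggered a web search


def search_probability(result: DailyResult) -> float:
    """Share of runs that triggered a web search, between 0.0 and 1.0."""
    if result.runs <= 0:
        raise ValueError("runs must be positive")
    return result.searched / result.runs


def standard_error(result: DailyResult) -> float:
    """Rough binomial sampling noise for the daily estimate."""
    p = search_probability(result)
    return sqrt(p * (1 - p) / result.runs)


# Illustrative numbers only: 40 of 100 runs triggered a search.
example = DailyResult("safest bicycle for a child", "2025-10-06", runs=100, searched=40)
probability = search_probability(example)   # 0.4
noise = standard_error(example)             # a bit under 0.05
```

With 100 runs, a single day's figure carries a few percentage points of sampling noise, so small day-to-day wiggles mean less than the large swings described in this piece.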

Some questions triggered zero web searches. Ever.

"At what age should a child begin to learn a bike?" got 0% search probability across all 15 days. ChatGPT treats this as locked-in knowledge. No validation needed.

That's fine, and it's what we expected.

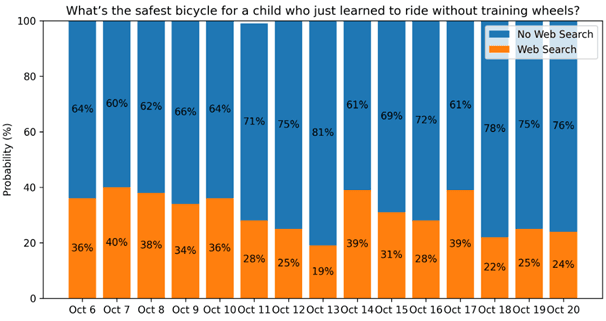

Now look at this: "What's the safest bicycle for a child who just learned to ride without training wheels?" This one swung from 40% search probability to 19% in five days.

Safety questions? One day the AI feels confident. The next day it's searching the web for answers. If you're running AI customer service for an e-commerce store, this could be an operational risk.

The 50% Ceiling

Our main finding: no question averaged above 50% search probability.

ChatGPT defaults to internal knowledge for product guidance. It only searches when confidence drops. But even then, it stays under 50%. That suggests the model has built-in guardrails. Maybe to prevent over-reliance on external data. Maybe to manage costs.

We'll never know.

Product comparison queries showed moderate swings. "What is the difference between a 20-inch and 24-inch bike for kids?" You'd expect stability here. These are measurements, not opinions. But search probability varied anyway.

The model treats age-specific recommendations differently by cohort. A seven-year-old's bike query behaves differently than a five-year-old's. And there's no pattern we can see.

When Stable Becomes Volatile Overnight

This is where it gets interesting.

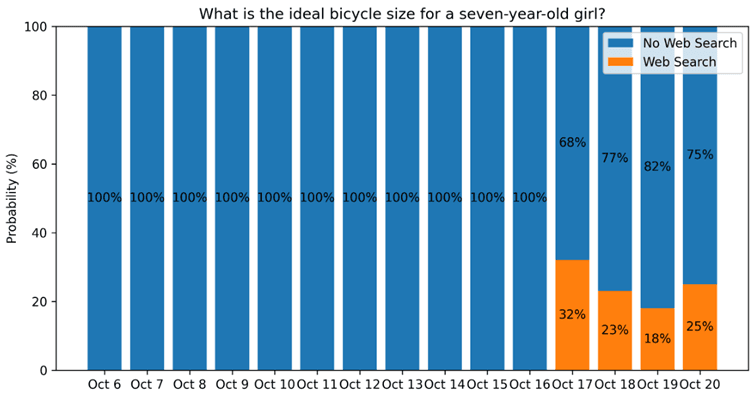

"What is the ideal bicycle size for a seven-year-old girl?" showed 0% search probability for 11 straight days. Predictable. Internal knowledge only.

Then on October 17, it jumped to 32%. And stayed there.

We did not see a model update. No explanation.

If you're monitoring AI performance monthly, you missed it. If you're not monitoring at all, you don't even know it happened.
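A daily check can catch this kind of break automatically. The sketch below is one possible approach, not the method used in this research: it looks for a stable run of days followed by a reading that departs sharply from that baseline. The function name and the thresholds (`min_plateau`, `tolerance`, `jump`) are assumptions you would tune yourself, and the series is reconstructed from the figures quoted above (0% for 11 days, then 32%).

```python
from datetime import date, timedelta
from typing import Optional, Sequence


def find_shift(
    series: Sequence[float],
    min_plateau: int = 5,     # days of stability required before a shift counts
    tolerance: float = 0.05,  # max spread allowed inside the plateau
    jump: float = 0.20,       # min departure from the plateau average
) -> Optional[int]:
    """Return the index of the first day that breaks a stable plateau, or None."""
    for i in range(min_plateau, len(series)):
        window = series[i - min_plateau:i]
        if max(window) - min(window) > tolerance:
            continue  # the preceding days were not stable, so there is no baseline
        baseline = sum(window) / len(window)
        if abs(series[i] - baseline) >= jump:
            return i
    return None


# Reconstructed from the text: 11 days at 0%, then 32% for the remaining 4 days.
seven_year_old_query = [0.0] * 11 + [0.32] * 4
start = date(2025, 10, 6)

shift_index = find_shift(seven_year_old_query)
if shift_index is not None:
    shift_day = start + timedelta(days=shift_index)
    print(f"Shift detected on {shift_day.isoformat()}")  # -> 2025-10-17
```

Run daily, a check like this flags the change on the day it happens instead of weeks later.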

Brand evaluation queries showed similar volatility. "Is this brand worth buying Abus Smiley 3.0?" fluctuated between 22% and 47% search probability. Your product gets recommended differently on Tuesday than Monday.

The Variables We Can't See

The research found patterns but zero root causes.

Why does one query maintain 100% stability while similar queries swing wildly? Why do probabilities shift on specific dates? What triggers these changes?

These blind spots create problems for e-commerce teams. You can't predict when AI tools will shift from internal knowledge to web searches. That affects response speed and recommendation consistency.

Monitoring Isn't Optional Anymore

That 11-day plateau followed by a sudden shift? That's why point-in-time audits don't work.

You need continuous monitoring systems that track when and how often your product categories trigger web searches. Changes in search behavior may signal shifts in AI confidence, altered training weights, or modified cost parameters. We can't tell which from the outside, but all of it affects customer experience.

So what do you do?

Product categories with high volatility need special attention. Safety equipment. Brand evaluations. These might benefit from custom training data, explicit product feeds, or hybrid approaches that combine AI responses with structured product databases.
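One simple way to find those categories is to rank queries by how far their daily search probability swings. The sketch below assumes you already store a list of daily probabilities per query. The `flag_volatile` helper, the 15-point threshold, and the short sample series are hypothetical. The sample values only echo the ranges quoted in this article (22% to 47% for the brand query, a flat 0% for the learning-age query).

```python
def flag_volatile(
    history: dict[str, list[float]],
    threshold: float = 0.15,  # assumed cutoff: a swing of 15 points or more
) -> list[tuple[str, float]]:
    """Return (query, spread) pairs whose max-min spread meets the threshold,
    largest spread first."""
    flagged = []
    for query, probabilities in history.items():
        if not probabilities:
            continue  # nothing recorded yet for this query
        spread = max(probabilities) - min(probabilities)
        if spread >= threshold:
            flagged.append((query, spread))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Illustrative history, not the study's full data set.
history = {
    "brand evaluation": [0.22, 0.47],
    "learning age": [0.0, 0.0],
}

for query, spread in flag_volatile(history):
    print(f"{query}: swing of {spread:.0%}")
```

Queries that land on this list are the ones to back with product feeds or structured data first.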

The goal? Consistency regardless of search behavior swings.

The Real Question

It's not whether AI changes behavior. It's whether you're monitoring when it does.

This research covers 15 days of bicycle queries. One vertical. One timeframe. One model. But the patterns suggest broader implications for anyone using AI in e-commerce.

The 50% ceiling. The tipping point behavior. The category-specific volatility. These aren't bugs. They're features of systems operating under constraints we can't see.

So monitor actively. Test continuously. Build redundancy where volatility runs high.